Verifying the app's results

Please note: That this workshop has been deprecated. For the latest and updated version featuring the newest features, please access the Workshop at the following link: Cost efficient Spark applications on Amazon EMR.

This workshop remains here for reference to those who have used this workshop before, or those who want to reference this workshop for earlier version.

Please note: That this workshop has been deprecated. For the latest and updated version featuring the newest features, please access the Workshop at the following link: Cost efficient Spark applications on Amazon EMR.

This workshop remains here for reference to those who have used this workshop before, or those who want to reference this workshop for earlier version.

In this section we will use Amazon Athena to run a SQL query against the results of our Spark application in order to make sure that it completed successfully. We’ll compare the results when you didn’t interrupt the Spark job when creating the cluster, with the results when you interrupt three Spot task nodes.

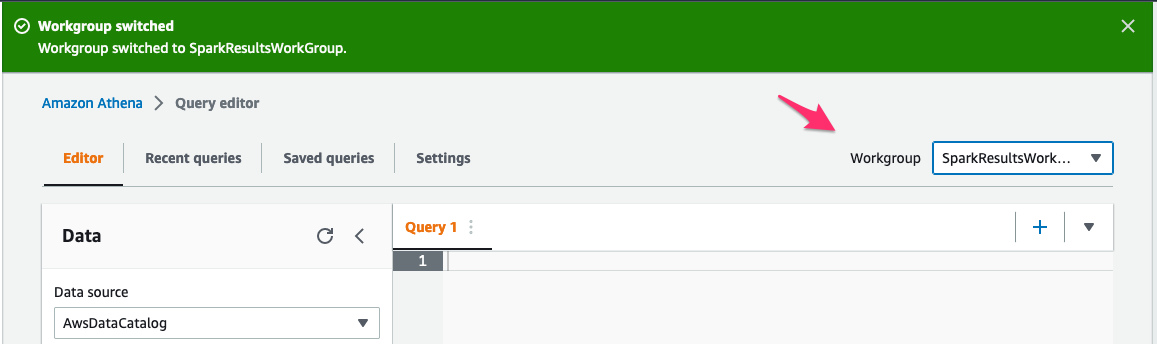

- In the AWS Management Console, go to the Athena service and verify that you are in the correct region where you ran your EMR cluster.

- On the right hand side of the screen there’s a

Workgroupdropdown, click it and change it toSparkResultsWorkGroupoption.

-

Go to the

Saved queriestab, and open theEMRWorkshopResultssaved query. This is the query to create the table that uses the S3 bucket as a source where the first Spark job saved its results. -

Click on the

Runbutton. -

Go to the

Saved queriestab again, and open theEMRWorkshopResultsSpotsaved query. This is the query to create the table that uses the S3 bucket as a source where the subsequen Spark job that you interrupted saved its results. -

To look at some of the results, run this query:

SELECT * FROM "EMRWorkshopResults" ORDER BY count DESC limit 100; -

And to confirm that the number of rows match, run the following commands:

SELECT COUNT(*) FROM "EMRWorkshopResults";SELECT COUNT(*) FROM "EMRWorkshopResultsSpot"; -

Both results match.